
The global shift toward real-time path tracing is not merely a hardware upgrade; it is a fundamental redirection of how we communicate spatial volume. As urban densities in hubs like Singapore reach unprecedented levels of complexity, the traditional “flythrough” fails to capture the metabolic rhythm of the city. We are moving toward an era where architectural animation is no longer a static presentation but a high-fidelity simulation of lived experience.

Nuvira Perspective

At Nuvira Space, we view the intersection of human intuition and machine intelligence as the ultimate design frontier. We recognize that the gap between digital intent and architectural reality is closed not by higher pixel counts, but by the synthesis of real-time engines and physics-based light simulation. Our authority is built on mastering the “ghost in the machine”—leveraging GPU-accelerated ray tracing to ensure that every photon behaves as it would in the physical world, turning cold geometry into a persuasive, human-centric narrative.

The Death of the Legacy Walkthrough: A Disruptive Analysis

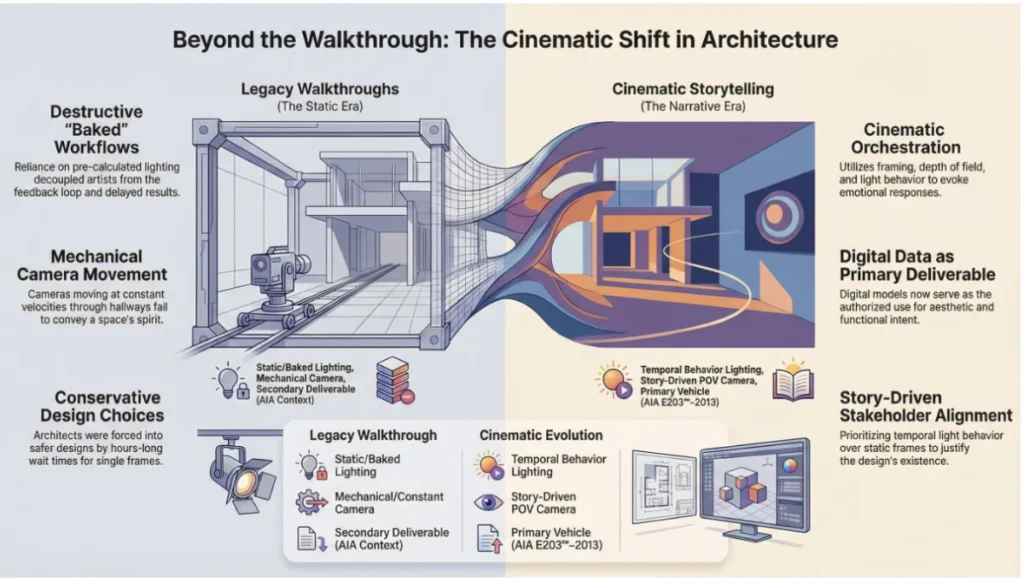

Legacy workflows relied on “baking” lighting—a destructive, time-consuming process that decoupled the artist from the feedback loop. In the past, architects were forced to wait hours for a single frame, leading to “conservative” design choices. By shifting to a cinematic, story-driven approach, we prioritize temporal light behavior over static frames.

This is a critical evolution recognized by the American Institute of Architects (AIA) in their Digital Practice guides, which emphasize that digital data is no longer a secondary deliverable but the primary vehicle for stakeholder alignment. Specifically, AIA Document E203™–2013 outlines how the “authorized uses” of digital models are defined for a project, including the communication of aesthetic and functional intent.

The “walkthrough” is dead because it lacks a point of view. A mechanical camera moving at a constant velocity through a hallway conveys nothing about the spirit of the space. In contrast, cinematic storytelling utilizes the principles of cinematography—framing, depth of field, and light orchestration—to evoke an emotional response that justifies the design’s existence.

Step-by-Step Workflow: The Nuvira Cinematic Pipeline

1. Pre-Visualization and Camera Blocking

Before a single shader is applied, you must establish the “Visual Script.” Stop using linear paths.

- Camera Logic: Use 35mm or 50mm prime equivalents to mimic human ocular focus. Prime lenses create a sense of realism that zoom lenses often lack in CG environments.

- Z-Depth Scripting: Map your focal planes to highlight structural junctions or material transitions. This is where smart glass technology can be showcased effectively, transitioning from opaque to transparent to reveal interior programming.

- The “Weight” of the Camera: In your sequencer, add a subtle noise modifier to the camera’s transform to simulate the micro-tremors of a handheld rig or a physical gimbal. This “imperfection” grounds the viewer in reality.

- Technical Spec: Set your `Field of View (FOV)` between 35 and 45 degrees for interior intimacy; avoid the “fisheye” distortion of 18mm wide-angle lenses, which breaks spatial scale.
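The relationship between the focal lengths and the FOV range quoted above can be checked with a few lines of arithmetic. This is a minimal sketch that assumes an ideal rectilinear lens and a full-frame 36 mm sensor width; the article does not name a filmback, and `SENSOR_WIDTH_MM` and `horizontal_fov_deg` are illustrative names rather than engine settings.

```python
import math

# Assumption: a full-frame 36 mm wide sensor; the article does not name a filmback.
SENSOR_WIDTH_MM = 36.0


def horizontal_fov_deg(focal_length_mm: float, sensor_width_mm: float = SENSOR_WIDTH_MM) -> float:
    """Horizontal field of view of an ideal rectilinear lens, in degrees."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


# Compare the wide-angle lens the article warns against with the two suggested primes.
for focal in (18.0, 35.0, 50.0):
    print(f"{focal:.0f} mm -> {horizontal_fov_deg(focal):.1f} degrees")
```

Under this assumption a 50mm prime lands at roughly 40 degrees, inside the suggested range, a 35mm prime comes out somewhat wider at roughly 54 degrees, and 18mm reaches 90 degrees. A smaller filmback narrows all three values, so it is worth rerunning the numbers with the sensor width your camera actor actually uses.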

2. Global Illumination (GI) and Ray-Tracing Parameters

In engines like Unreal Engine 5.4 or Vantage, GI is the heartbeat of the scene.

- Lumen Scene Detail: Increase `Lumen Scene Lighting Quality` to 4.0 to eliminate light leaking in thin-walled geometry.

- Hardware Ray Tracing (HWRT): Enable `Ray Traced Shadows` with a high sample count per pixel (at least 16spp) to achieve soft, physically accurate penumbras.

- Reflections & Translucency: Implement “Ray Traced Translucency” rather than raster-based methods. This is essential for accurate light refraction through water features or glass facades.

- Reflection Bounces: Set max reflection bounces to 2. This is critical for glass-heavy facades where “recursive reflections” define the building’s silhouette.
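To get a feel for why a small bounce count is usually enough, the following sketch estimates how much light survives successive reflections between two identical glass surfaces. It uses the Fresnel reflectance at normal incidence and the glass IOR of 1.52 quoted in the FAQ; it is a simplified illustration, not a description of how any engine evaluates reflections, and the function names are hypothetical.

```python
def fresnel_normal_reflectance(ior: float, ior_outside: float = 1.0) -> float:
    """Fraction of light reflected at normal incidence (Fresnel equations)."""
    return ((ior - ior_outside) / (ior + ior_outside)) ** 2


def bounce_contributions(reflectance: float, max_bounces: int) -> list[float]:
    """Energy remaining after each successive bounce between identical surfaces."""
    return [reflectance ** n for n in range(1, max_bounces + 1)]


GLASS_IOR = 1.52  # value the article's FAQ gives for glass

r0 = fresnel_normal_reflectance(GLASS_IOR)
for bounce, energy in enumerate(bounce_contributions(r0, 4), start=1):
    print(f"bounce {bounce}: {energy:.6f} of the incoming light")
```

At normal incidence the first bounce keeps about 4% of the light and the second well under 1%, so later bounces add little. Reflectance rises steeply toward grazing angles, which is where facade-to-facade reflections become visible and where the second bounce earns its cost.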

3. Temporal Post-Production

Real-world value is created in the final 10% of the process.

- Motion Blur: Implement a shutter angle of 180 degrees. This provides the “cinematic” look by blurring fast-moving foreground elements while keeping the architecture crisp.

- Tone Mapping: Use ACES (Academy Color Encoding System) to ensure the dynamic range from high-noon sun to deep interior shadows doesn’t lose data in the blacks or blow out the whites.

- Color Grading: Avoid high-saturation “video” looks. Use a LUT (Look-Up Table) that mimics Kodak 500T film stock to provide a professional, architectural editorial feel.
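The shutter angle above translates directly into an exposure time per frame, which in turn sets how long a motion-blur streak is. A minimal sketch of that arithmetic follows; the function names and the 960 px/s example speed are illustrative assumptions.

```python
from fractions import Fraction


def exposure_time(shutter_angle_deg: int, fps: int) -> Fraction:
    """Time the shutter is open per frame, in seconds."""
    return Fraction(shutter_angle_deg, 360) / fps


def blur_length_px(speed_px_per_s: float, shutter_angle_deg: int, fps: int) -> float:
    """Streak length for an object crossing the frame at a constant screen speed."""
    return speed_px_per_s * float(exposure_time(shutter_angle_deg, fps))


print(exposure_time(180, 24))  # prints 1/48
print(blur_length_px(960.0, 180, 24))
```

A 180-degree shutter at 24 fps is open for 1/48 of a second, so an element moving at 960 pixels per second smears across about 20 pixels, while the static architecture shows no blur unless the camera itself is moving quickly.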

Comparative Analysis: Nuvira vs. Industry Standard

| Feature | Industry Standard (Legacy) | Nuvira Disruptive Workflow |
|---|---|---|
| Lighting | Pre-baked lightmaps (Static) | Real-time Path Tracing (Dynamic) |
| Iteration | 24-hour render wait times | Real-time viewport feedback |
| Narrative | Mechanical “Fly-through” | Cinematic sequences with scripted “beats” |
| Materiality | Low-res tiled textures | 8K Scanned Megascans with micro-displacement |
| Output | Flat MP4 Video | Interactive, multi-platform 4K simulation |

The AIA Evidence-Based Approach: Communication and Digital Delivery

In line with the AIA Document E203™–2013 (Building Information Modeling and Digital Data Exhibit), the transition to cinematic animation serves a legal and professional purpose. High-fidelity visualization reduces ambiguity—the primary cause of litigation in construction. When a client can see the exact interaction of light on a kinetic architecture facade, the visualization acts as a binding agreement on aesthetic intent.

Furthermore, AIA’s Guide to Building Performance highlights that 3D visualization is no longer just for marketing; it is for performance simulation. Visualizing how vertical forests impact local cooling and humidity requires a cinematic approach to show the growth cycles and seasonal changes that static renders ignore.

Advanced Simulation: Neuroarchitecture and Spatial Impact

Cinematic animation allows us to explore “Neuroarchitecture”—the study of how the built environment affects the human brain. By utilizing “Physically Based Rendering” (PBR) and accurate lighting, we can simulate the “Circadian Rhythm” impact of a design.

- Temporal Light Simulation: Animate a 24-hour cycle in 60 seconds. Observe how shadows move through a living area.

- Material Resonance: Use high-fidelity audio (Spatial Audio) in your animations to simulate the acoustic dampening of different materials. This provides a multi-sensory validation of the design.
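The 24-hour time-lapse has a convenient property at 24 fps: a 60-second clip contains 1440 frames, and a day contains 1440 minutes, so each frame advances the simulated clock by one minute. The sketch below maps frames to clock time and estimates the sun's elevation with the standard spherical formula. It assumes an equinox (declination 0), ignores the equation of time, and uses an approximate latitude for Singapore; all names are illustrative.

```python
import math

FPS = 24                  # frame rate the article recommends for cinematic output
CLIP_SECONDS = 60         # length of the compressed day
SINGAPORE_LAT_DEG = 1.35  # assumption: approximate latitude of Singapore


def time_of_day_hours(frame: int, fps: int = FPS, clip_seconds: int = CLIP_SECONDS) -> float:
    """Simulated clock time (0-24 h) shown on a given frame of the time-lapse."""
    total_frames = fps * clip_seconds
    return 24.0 * (frame % total_frames) / total_frames


def sun_elevation_deg(hour: float, latitude_deg: float = SINGAPORE_LAT_DEG,
                      declination_deg: float = 0.0) -> float:
    """Approximate solar elevation; declination 0 corresponds to an equinox."""
    lat = math.radians(latitude_deg)
    dec = math.radians(declination_deg)
    hour_angle = math.radians(15.0 * (hour - 12.0))  # 15 degrees per hour from solar noon
    sin_elev = (math.sin(lat) * math.sin(dec)
                + math.cos(lat) * math.cos(dec) * math.cos(hour_angle))
    sin_elev = max(-1.0, min(1.0, sin_elev))  # guard against rounding just outside [-1, 1]
    return math.degrees(math.asin(sin_elev))


for frame in (0, 360, 720, 1080):
    hour = time_of_day_hours(frame)
    print(f"frame {frame:4d} -> {hour:5.2f} h, sun elevation {sun_elevation_deg(hour):6.1f} deg")
```

Values like these could drive the rotation of a directional light in a sequencer, though a production sun-position system would also account for date, longitude, and time zone.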

Concept Project Spotlight: Speculative / Internal Concept Study — “The Vertical Canopy” by Nuvira Space

Project Overview: Location / Typology / Vision

This study explored the integration of a massive vertical forest within the dense urban fabric of Singapore’s Marina South. The goal was to simulate the dappled sunlight (Komorebi) through 200 species of tropical flora.

Design Levers Applied

- Subsurface Scattering (SSS):

  - Applied to all leaf geometry.

  - Technical Spec: `Scattering Radius` set to 0.2 to allow light to penetrate foliage, creating the translucent “glow” seen in nature.

- Volumetric Fog (Exponential Height Fog):

  - Used to visualize the humidity of the Singaporean climate.

  - Technical Spec: `Volumetric Scattering Intensity` set to 0.8 to catch god-rays between the structural columns.

- Particle Simulation:

  - Niagara-based dust motes and falling petals to add “micro-motion” to otherwise static shots.

- Wind Dynamics:

  - Used a “Simple Grass Wind” node globally, synced to a procedural noise wind controller to ensure all vegetation moves in a unified, believable direction.
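The idea behind the shared wind controller can be sketched outside the engine: one global heading, one time-varying gust signal, and a per-plant phase delay so gusts appear to travel across the site. This is an illustrative stand-in, not the material node or the controller used in the study; layered sines replace true procedural noise, and every name and constant here is an assumption.

```python
import math

# Assumption: one global wind heading shared by every plant, in the XY plane.
WIND_DIRECTION = (math.cos(math.radians(30.0)), math.sin(math.radians(30.0)))


def gust_strength(t: float) -> float:
    """Layered sines as a cheap stand-in for procedural noise, in the range 0..1."""
    raw = math.sin(0.7 * t) + 0.5 * math.sin(1.9 * t + 1.3) + 0.25 * math.sin(4.3 * t + 2.1)
    return 0.5 + raw / 3.5  # raw lies in [-1.75, 1.75]


def sway_offset(plant_xy: tuple[float, float], t: float,
                amplitude: float = 0.05) -> tuple[float, float]:
    """Horizontal displacement of a plant tip; phase is delayed by distance downwind."""
    downwind = plant_xy[0] * WIND_DIRECTION[0] + plant_xy[1] * WIND_DIRECTION[1]
    strength = gust_strength(t - 0.2 * downwind)  # gusts arrive later further downwind
    return (amplitude * strength * WIND_DIRECTION[0],
            amplitude * strength * WIND_DIRECTION[1])


for plant in ((0.0, 0.0), (5.0, 2.0), (12.0, -3.0)):
    dx, dy = sway_offset(plant, t=3.0)
    print(f"plant at {plant}: offset ({dx:+.4f}, {dy:+.4f}) m")
```

Because every offset is a non-negative multiple of the same direction vector, all plants lean the same way while still differing in timing, which is the "unified, believable direction" the bullet describes.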

Transferable Takeaway

Architecture is never static. By introducing “secondary motion” (wind in trees, shifting clouds), you convey the scale and atmosphere of the building in ways a static image cannot. That matters most in high-density urban environments like Singapore, where biophilic integration is a requirement, not a luxury.

Intellectual Honesty: Hardware Check

You cannot run a disruptive cinematic workflow on consumer-grade laptops.

- GPU: Minimum NVIDIA RTX 4090 (24GB VRAM) for local path tracing.

- CPU: 16-core minimum (Threadripper or i9) to handle the geometry streaming and Niagara simulations.

- VRAM Management: Keep your scene under 18GB to avoid “out of memory” crashes during high-res 4K export.

- Storage: NVMe M.2 SSDs (Gen 4/5) are mandatory for streaming the high-bitrate textures required for 8K close-ups.
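A rough texture budget helps make the 18GB ceiling concrete. The sketch below estimates the memory footprint of a single 8K texture, uncompressed and block-compressed, and how many would fit in the budget if textures were the only consumer. It assumes square 8192 x 8192 textures with a full mip chain; the helper name and constants are illustrative.

```python
def texture_bytes(width: int, height: int, bytes_per_texel: int, with_mips: bool = True) -> int:
    """Approximate GPU memory for one 2D texture; a full mip chain adds about one third."""
    base = width * height * bytes_per_texel
    return base * 4 // 3 if with_mips else base


GIB = 1024 ** 3
MIB = 1024 ** 2
BUDGET_GIB = 18  # scene ceiling suggested in the article
RGBA8 = 4        # uncompressed, 4 bytes per texel
BC7 = 1          # block-compressed, 16 bytes per 4x4 block = 1 byte per texel

for label, bytes_per_texel in (("RGBA8", RGBA8), ("BC7", BC7)):
    size = texture_bytes(8192, 8192, bytes_per_texel)
    count = BUDGET_GIB * GIB // size
    print(f"8K {label}: {size / MIB:.0f} MiB each, {count} fit in {BUDGET_GIB} GiB")
```

In practice geometry, render targets, and ray tracing acceleration structures share the same memory, and texture streaming keeps only part of each texture resident, so treat these counts as an upper bound rather than a target.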

2030 Future Projection: The End of “Rendering”

By 2030, the concept of a “render button” will be obsolete. We are moving toward “Persistent Virtual Twins.” Architectural animations will be live streams from a 1:1 digital replica of the city. Clients will not watch a video; they will inhabit a live simulation where weather, occupancy, and aging are simulated in real-time via neural radiance fields (NeRFs) and Gaussian Splatting. The convergence of AI and real-time engines will allow architects to modify geometry on the fly during client presentations, with global illumination updating in milliseconds. We will move from “creating videos” to “curating realities.”

Secret Techniques: Advanced User Guide

To achieve the “Nuvira Look,” you must manipulate the “Imperfect Reality.”

- Chromatic Aberration (Subtle): Apply a 0.05 value in post to mimic real camera lens fringes.

- Film Grain: A jittered 0.1 grain prevents the “too clean” digital look of CG.

- The “Slow-In/Slow-Out” Rule: Never use linear keyframes for camera movement. Use Bezier curves with a long “ease-in” to mimic the weight of a physical camera dolly.

- Lens Flare Logic: Only use flares if the camera is looking directly at a high-intensity light source. Procedural flares should be used sparingly—subtlety is the hallmark of high-end production.
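The slow-in/slow-out rule can be illustrated with the classic smoothstep curve, which is equivalent to a cubic Bezier whose handles are flat and sit at one third and two thirds of the interval. This is a minimal sketch of the easing idea, not an export from any sequencer; the 10 m, 4-second move and the function names are assumptions.

```python
def smoothstep(u: float) -> float:
    """Cubic ease with zero velocity at both ends (3u^2 - 2u^3), clamped to 0..1."""
    u = max(0.0, min(1.0, u))
    return u * u * (3.0 - 2.0 * u)


def dolly_position(start: float, end: float, frame: int, total_frames: int) -> float:
    """Camera position along a track for a move that eases in and out."""
    return start + (end - start) * smoothstep(frame / total_frames)


# A 4-second, 10 m dolly move at 24 fps: per-frame step sizes grow, then shrink.
positions = [dolly_position(0.0, 10.0, f, 96) for f in range(97)]
steps = [b - a for a, b in zip(positions, positions[1:])]
print(f"first step {steps[0]:.4f} m, middle step {steps[48]:.4f} m, last step {steps[-1]:.4f} m")
```

A linear keyframe would give 96 identical steps; the eased move starts and ends with steps close to zero, which is what reads as the inertia of a physical dolly. Lengthening the ease-in, as the rule suggests, corresponds to pulling the first Bezier handle further along the time axis.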

Comprehensive Technical FAQ

General Animation Strategy

Q: How do I reduce “flicker” in real-time architectural animations?

- A: Flicker usually stems from Temporal Anti-Aliasing (TAA) or low samples in GI.

  - Increase `Temporal Super Resolution (TSR)` to 100%.

  - Ensure `Shadow Map Method` is set to Virtual Shadow Maps (VSM) for high-frequency detail.

  - In the Movie Render Queue, use “Spatial Samples” (at least 8) and “Temporal Samples” (at least 8) to smooth out frame-to-frame noise.
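Spatial and temporal samples multiply, so it is worth estimating the cost before committing to an export. The sketch below counts the accumulated renders for a clip using the minimum values suggested in this answer; the function name is hypothetical and the per-sample render time is left out because it depends entirely on the scene and hardware.

```python
def render_budget(clip_seconds: float, fps: int, spatial_samples: int, temporal_samples: int) -> dict:
    """Count frames and accumulated samples for an offline-quality export."""
    frames = round(clip_seconds * fps)
    per_frame = spatial_samples * temporal_samples
    return {"frames": frames, "samples_per_frame": per_frame, "total_renders": frames * per_frame}


budget = render_budget(clip_seconds=60, fps=24, spatial_samples=8, temporal_samples=8)
print(budget)
```

For a 60-second clip at 24 fps this comes to 1440 output frames at 64 samples each, or 92,160 individual renders, which is why sample counts are usually raised only for the final export.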


Q: Is 60FPS better than 24FPS for architectural visuals?

- A: No. For cinematic storytelling, 24FPS or 30FPS is superior. 60FPS feels like a video game (overly smooth), which can detach the viewer from the emotional narrative. Use 24FPS with a 1/48 s shutter speed, which corresponds to the 180-degree shutter angle recommended above, for a professional feel.

Material & Lighting Specifics

Q: My glass looks like a mirror. How do I fix the transparency?

- A: You likely have `Specular` too high and `Refraction` too low. Set `IOR (Index of Refraction)` for glass to 1.52. Use a “Thin Translucent” shader for windows to allow light transmission while maintaining surface reflections. Ensure your `Translucency Method` is set to Ray Tracing in the post-process volume.
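To see what an IOR of 1.52 means physically, Snell's law gives the angle at which a ray bends when it enters or leaves glass. The sketch below is a plain-Python illustration with hypothetical names; it returns `None` when the ray cannot exit the denser medium (total internal reflection).

```python
import math
from typing import Optional


def refraction_angle_deg(incidence_deg: float, ior_to: float = 1.52,
                         ior_from: float = 1.0) -> Optional[float]:
    """Snell's law: angle of the transmitted ray, or None on total internal reflection."""
    s = ior_from / ior_to * math.sin(math.radians(incidence_deg))
    if abs(s) > 1.0:
        return None
    return math.degrees(math.asin(s))


print(refraction_angle_deg(45.0))                            # air into glass
print(refraction_angle_deg(45.0, ior_to=1.0, ior_from=1.52))  # glass into air
```

A ray hitting glass at 45 degrees bends to roughly 28 degrees inside it, while a ray inside the glass at 45 degrees cannot escape into air at all. A thin-walled window shader sidesteps most of this bending, which is one reason it suits flat panes better than solid glass volumes.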

Q: How do I handle large-scale landscape flickering in the background?

- A: This is often “Z-fighting” or LOD (Level of Detail) popping. Set your `LOD Distance Scale` to a higher value (e.g., 2.0 or 4.0) to force high-detail meshes to stay active at a distance.

Secure Your Project’s Future

If your current visual output feels like a digital ghost town, it’s because you are missing the narrative thread. At Nuvira Space, we don’t just render buildings; we simulate the future of human habitation.

Ready to transition from static frames to cinematic reality? Contact our Visual Lab today to see how we can apply these disruptive workflows to your next development.