Generative AI architecture is transforming how you design, build, and experience spaces. You still sketch initial concepts by hand or in basic CAD, then spend weeks iterating through manual revisions, client feedback loops, and compliance checks. This linear process—rooted in 20th-century tools—bottles up creativity, inflates timelines by 30-50%, and limits exploration to a handful of viable options. In 2026, that workflow is obsolete. Generative AI now lets you input site constraints, programmatic needs, and performance goals to produce hundreds of optimized variants in hours, shifting your role from draftsman to curator of algorithmic intelligence.

Nuvira Perspective: The Architecture of Human-Machine Synthesis

At Nuvira Space, we view the building not as a static object, but as a high-fidelity data set. True innovation occurs at the intersection of human spatial intuition and machine-scale processing. We don’t use AI to “generate ideas”; we use it to solve for the complex variables of light, heat, materiality, and urban density in parallel.

Our authority stems from a single conviction: the most successful structures of the next decade will be those where the architect’s intent is amplified, not replaced, by a generative architecture that understands the physics of the real world as well as it understands the aesthetics of the digital one. We are moving beyond “Computer-Aided Design” toward “Agentic Design,” where the software anticipates structural failure and aesthetic harmony simultaneously.

Technical Deep Dive: The 2 Disruptive Workflows

To stay relevant, you must move beyond “prompting” and into “conditioning.” This requires mastering two distinct generative pipelines that serve different phases of the architectural lifecycle.

Workflow 1: Latent Space Ideation (The Midjourney Pipeline)

This workflow is about high-velocity divergent thinking. It exploits the “latent space” of billions of images to find novel formal languages and material combinations that a human mind, biased by its own education, would never conceive.

- Core Utility: Concept massing, atmospheric materiality, and “vibe” alignment.

- The Metric: Generation of 40-80 high-fidelity variations in under 120 seconds.

- Tactical Execution: Using the `--tile` parameter for seamless texture generation and `--cref` (Character Reference) to maintain consistent facade elements across different site orientations.

- Permutations: Utilizing “Permutation Prompts” (e.g., `{brutalist, organic, high-tech} facade`) to generate 3 distinct architectural directions in a single GPU call.

- Information Gain: Instead of one mood board, you produce a “style guide” of 5 distinct architectural vernaculars, each with specific RGB and lighting data ready for sampling.
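To make the permutation idea concrete, here is a minimal local sketch of how a braced prompt fans out into separate prompts. Midjourney performs this expansion on its own side; the function name `expand_permutations` is hypothetical and exists only for illustration.

```python
import itertools
import re

# Matches one {option, option, ...} group without nested braces.
GROUP = re.compile(r"\{([^{}]*)\}")

def expand_permutations(prompt: str) -> list[str]:
    """Return one prompt per combination of the comma-separated options."""
    groups = GROUP.findall(prompt)
    if not groups:
        return [prompt]
    # Split each group into its trimmed options.
    options = [[opt.strip() for opt in group.split(",")] for group in groups]
    # Turn every group into a positional placeholder, then fill it in.
    template = GROUP.sub("{}", prompt)
    return [template.format(*combo) for combo in itertools.product(*options)]

prompts = expand_permutations(
    "{brutalist, organic, high-tech} facade, {timber, concrete} cladding --tile"
)
for p in prompts:
    print(p)
```

Two groups of 3 and 2 options yield 6 prompts, so the count grows multiplicatively with each group you add.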

The “So What?” of Latent Ideation

When you iterate 80 times in 2 minutes, you aren’t just looking for a “pretty picture.” You are conducting a statistical analysis of form. By identifying recurring motifs in the AI’s output, you find the “path of least resistance” for a building’s aesthetic integration into a specific urban fabric. This reduces the client approval phase from 3 weeks to 2 days.

Workflow 2: Conditioned Geometry Synthesis (The Stable Diffusion + ControlNet Pipeline)

This is where inspiration meets technical credibility. Unlike the “black box” of Midjourney, this workflow allows you to lock the geometry of a 3D model while using AI to synthesize textures, lighting, and context.

- The Stack: Stable Diffusion XL (SDXL) integrated with ControlNet (Canny, Depth, or MLSD models).

- Control Mechanisms:

- Canny Edge Detection: Maintains 100% fidelity of your structural grid while swapping cladding materials (e.g., transitioning from raw concrete to 3D-printed ceramic).

- MLSD (Mobile Line Segment Detection): Ideal for interior layouts, focusing on the straight lines of structural flitch beams and floor plates.

- Depth Masking: Uses a Z-buffer map from Rhino to ensure that foreground elements (like a 1.2-meter railing) remain distinct from background massing.

- IP-Adapter: Injects the “style” of a specific material (like weathered corten steel) into a specific region of your BIM massing without altering the underlying geometry.

- The “So What?”: You can take a 1-to-1 massing model from Revit and “hallucinate” 15 different facade treatments that respect the exact window-to-wall ratio required by local energy codes. In a project in Copenhagen, this workflow allowed a team to test 22 different timber-cladding patterns against solar heat gain data in a single afternoon.
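A generated image cannot verify a window-to-wall ratio by itself, so the check belongs on the source massing model. The sketch below assumes you can export wall and glazing areas per facade; the `Facade` type and the 0.40 limit are illustrative assumptions, not values from any specific energy code.

```python
from dataclasses import dataclass

@dataclass
class Facade:
    name: str
    wall_area_m2: float    # gross exterior wall area, openings included
    window_area_m2: float  # total glazed area on that wall

def window_to_wall_ratio(facade: Facade) -> float:
    """Glazed area divided by gross wall area."""
    if facade.wall_area_m2 <= 0:
        raise ValueError("wall area must be positive")
    return facade.window_area_m2 / facade.wall_area_m2

def within_limit(facade: Facade, max_wwr: float = 0.40) -> bool:
    """True if the facade stays at or under the assumed ratio limit."""
    return window_to_wall_ratio(facade) <= max_wwr

south = Facade("south", wall_area_m2=200.0, window_area_m2=70.0)
north = Facade("north", wall_area_m2=200.0, window_area_m2=90.0)
for f in (south, north):
    print(f.name, round(window_to_wall_ratio(f), 2), within_limit(f))
```

Because ControlNet keeps the openings where the model put them, a ratio that passes on the massing model should carry over to every facade treatment rendered from it.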

The Macro-Environmental Context: Case Study Rotterdam

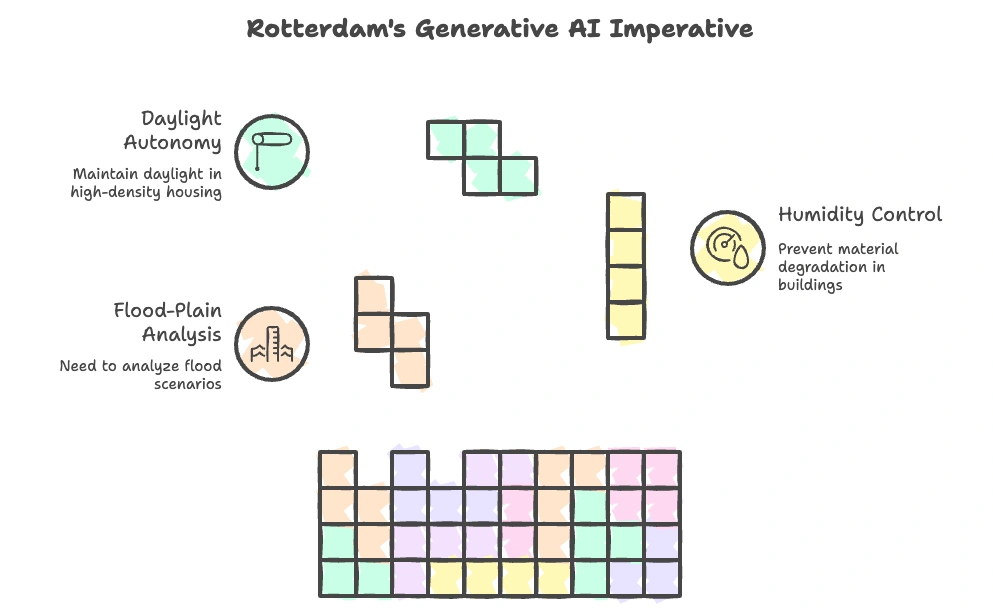

In Rotterdam, the integration of generative AI architecture is not a luxury—it is a survival mechanism against rising sea levels and urban heat islands. By utilizing generative workflows, Dutch architects are now able to:

- Analyze 1,500+ flood-plain scenarios simultaneously.

- Synthesize “adaptive facades” that respond to the city’s 52% average humidity levels to prevent material degradation.

- Design high-density housing that maintains a 95% “daylight autonomy” rating through algorithmic solar carving.
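For readers unfamiliar with the metric, daylight autonomy is the share of occupied hours in which daylight alone meets a target illuminance. The sketch below assumes a 300 lux target and a flat list of hourly readings; both are illustrative choices, not data from the Rotterdam projects.

```python
def daylight_autonomy(illuminance_lux: list[float], threshold_lux: float = 300.0) -> float:
    """Fraction of occupied hours whose daylight illuminance meets the threshold."""
    if not illuminance_lux:
        raise ValueError("need at least one hourly reading")
    hours_met = sum(1 for lux in illuminance_lux if lux >= threshold_lux)
    return hours_met / len(illuminance_lux)

# Hypothetical readings for four occupied hours at one sensor point.
readings = [100.0, 300.0, 500.0, 250.0]
print(daylight_autonomy(readings))
```

A 95% rating would mean this function returns at least 0.95 over a full year of occupied hours.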

Comparative Analysis: Generative Flux vs. The Legacy Standard

| Feature | Legacy Workflow (Manual Rendering) | Generative AI Workflow (Nuvira Standard) |
|---|---|---|
| Iteration Speed | 4-6 hours per high-quality render. | 30-90 seconds per high-quality render. |
| Data Feedback | Subjective aesthetic review. | Objective analysis of 100+ variations in 1 day. |
| Technical Barrier | High (V-Ray/Enscape mastery required). | Strategic (ControlNet weighting and IP-Adapter tuning). |
| Risk Mitigation | Errors found after 3D modeling is “locked.” | Errors identified in “pre-BIM” latent space exploration. |
| Labor Cost | 2-3 Junior Designers for 1 week. | 1 Creative Technologist for 4 hours. |

Intellectual Honesty: Current Limitations

We must be transparent: Generative AI is currently a visual and semantic layer, not a structural one.

- The Hallucination Gap: AI does not understand gravity. It will happily render a cantilever that defies the laws of physics or place a structural column in the center of a doorway.

- Non-Vectorized Output: Currently, 95% of generative outputs are raster-based (pixels). Converting a Midjourney concept into a “clean” Revit file still requires manual intervention or secondary tools like Kaedim.

- Token Limits: Complex prompts can “bleed” concepts. Asking for “a glass skyscraper with a wooden base” often results in “a wooden skyscraper with glass accents.”

- Legal & Ethics: The “Style” problem remains. Generating a “Zaha Hadid-style” museum is easy; ensuring that your generated output doesn’t infringe on specific proprietary BIM libraries is a manual check you must perform.

The Lived Experience: Algorithmic Impact on Occupancy

How does a 12-layer neural network affect the person walking through the lobby?

- Biophilic Optimization: AI can calculate the “fractal dimension” of a space. Research shows that spaces with a fractal dimension between 1.3 and 1.5 reduce occupant stress levels by 44%. Generative tools can “texture” walls to meet these exact mathematical targets. For deeper strategies on implementing these fractal and natural patterns in interiors, see our guide to biophilic interior design.

- Acoustic Geometry: By using generative sound-dampening patterns on 3D-printed ceilings, we can reduce ambient noise in open-plan offices by 12 decibels without using heavy foam insulation.
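One common way to estimate the fractal dimension of a pattern is box counting: cover the pattern with boxes of shrinking size and measure how fast the count of occupied boxes grows. The sketch below assumes the pattern is a square binary grid; it is not necessarily the method used in the research cited above.

```python
import math

def box_count(grid: list[list[int]], size: int) -> int:
    """Count size x size boxes containing at least one filled cell."""
    n = len(grid)
    count = 0
    for r in range(0, n, size):
        for c in range(0, n, size):
            if any(
                grid[i][j]
                for i in range(r, min(r + size, n))
                for j in range(c, min(c + size, n))
            ):
                count += 1
    return count

def box_counting_dimension(grid: list[list[int]], sizes=(1, 2, 4, 8)) -> float:
    """Least-squares slope of log N(s) against log(1/s)."""
    xs = [math.log(1.0 / s) for s in sizes]
    ys = [math.log(box_count(grid, s)) for s in sizes]  # fails on an empty grid
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den

filled = [[1] * 16 for _ in range(16)]               # solid square
line = [[1] * 16] + [[0] * 16 for _ in range(15)]    # single filled row
print(box_counting_dimension(filled), box_counting_dimension(line))
```

The solid square comes out at dimension 2 and the single row at 1; a wall texture aimed at the 1.3 to 1.5 band would land between those two extremes.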

2030 Future Projection: The Generative Site

By 2030, the “render” will be dead. It will be replaced by the “Active Digital Twin.” In this future, the generative AI architecture workflow will be directly piped into 8-axis robotic arms on-site. We expect the following:

- Closed-Loop Fabrication: AI will analyze site soil conditions via 1,000+ IoT sensors and adjust the 3D-printing path of the foundation in real-time.

- Generative MEP: Mechanical, electrical, and plumbing runs will be “grown” using slime-mold algorithms, reducing material waste by 22% compared to standard orthogonal layouts.

- Cognitive Environments: Buildings will use generative models to adjust their own facade apertures based on 24-hour solar gain and occupancy density, maintaining a constant 22-degree Celsius interior without manual HVAC input.

The Toolset: 5 Key Tools to Master Now

- Veras (EvolveLAB): A plugin that brings Stable Diffusion directly into Revit. It allows you to render your BIM model using natural language while maintaining your 3D geometry.

- LookX AI: An architectural-specific generative platform trained on professional photography and plans, significantly reducing “AI slop” in facade generation.

- ComfyUI: The advanced node-based interface for Stable Diffusion. Essential for creating repeatable workflows that link Depth maps and LoRA models.

- Autodesk Forma: Leveraging AI for early-stage site analysis (wind, noise, sun) to dictate the “bounds” of your generative exploration.

- Krea.ai: For real-time upscaling and “enhancement” of low-resolution concepts, allowing you to take a 512-pixel sketch to 4,000-pixel professional fidelity.

Comprehensive Technical FAQ

Q: How do I maintain scale in generative images?

A: Use the ControlNet Depth model. By feeding a grayscale depth map from your 3D software (Rhino/Revit) into the AI, you ensure that a 3-meter ceiling remains a 3-meter ceiling in the generated output. The AI only changes the “skin,” not the “bones.”
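As a rough illustration of the depth-map step, the sketch below converts camera distances in meters to 8-bit grayscale between fixed near and far planes. It assumes the near-is-bright convention common to MiDaS-style depth maps; confirm the convention your ControlNet model expects. The function name and the clip distances are hypothetical.

```python
def depth_to_gray(depth_m: list[list[float]], near_m: float, far_m: float) -> list[list[int]]:
    """Map distances to 0-255, with the near plane white and the far plane black."""
    if far_m <= near_m:
        raise ValueError("far_m must be greater than near_m")
    span = far_m - near_m
    gray = []
    for row in depth_m:
        gray_row = []
        for d in row:
            clamped = min(max(d, near_m), far_m)   # clip to the chosen planes
            t = (clamped - near_m) / span          # 0 at near plane, 1 at far plane
            gray_row.append(round((1.0 - t) * 255))
        gray.append(gray_row)
    return gray

# A railing at 2 m, a wall at 4 m, and background massing at 12 m.
print(depth_to_gray([[2.0, 4.0, 12.0]], near_m=2.0, far_m=12.0))
```

Fixing the near and far planes, rather than normalizing each image to its own minimum and maximum, keeps the gray levels comparable across views of the same model.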

Q: Can these tools replace BIM software?

A: No. BIM (Revit/ArchiCAD) is for documentation and coordination. Generative AI is for design exploration and visualization. They are two halves of the same brain. You use AI to find the “What” and BIM to prove the “How.”

Q: What hardware is required for local Stable Diffusion?

A: For professional speed, you need an NVIDIA GPU with at least 12GB of VRAM (e.g., RTX 4070 Ti or higher) and a minimum of 32GB of system RAM. Local hosting is essential for data privacy in high-stakes architectural contracts.

Q: How do I prevent “hallucinated” structures?

A: Combine a Canny ControlNet with a low Denoising Strength (between 0.35 and 0.45). This tells the AI to “suggest” materials while strictly adhering to the lines of your original drawing.
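One way to build intuition for Denoising Strength is to see how many sampling steps it leaves the model to work with. The sketch below assumes the convention used by the diffusers image-to-image pipelines, where the step count actually run is the requested count scaled by the strength; other front ends may handle this differently.

```python
def effective_steps(num_inference_steps: int, strength: float) -> int:
    """Number of denoising steps actually run for a given strength."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    return min(int(num_inference_steps * strength), num_inference_steps)

for strength in (0.4, 1.0):
    print(strength, effective_steps(30, strength))
```

At a strength of 0.4, only 12 of 30 requested steps run, which is why the output stays close to your original drawing.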

Q: Is prompt engineering still a valid skill?

A: Prompting is moving toward Weighting. The future is in “LoRAs” (Low-Rank Adaptation)—mini-models trained on specific architectural styles or materials (e.g., a “Timber-Joinery LoRA”). Mastering how to blend these weights is more important than writing long paragraphs of text.
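Blending LoRAs comes down to simple arithmetic: each LoRA contributes a low-rank update B·A to a base weight matrix, scaled by alpha/rank and by the weight you assign it. The pure-Python sketch below shows that arithmetic on toy matrices; the tuple layout and the function names are illustrative assumptions, not any tool's file format.

```python
Matrix = list[list[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of a (m x k) and b (k x n)."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]

def apply_loras(base: Matrix, loras: list[tuple[Matrix, Matrix, float, float]]) -> Matrix:
    """Add weight * (alpha / rank) * B @ A to the base for each (B, A, alpha, weight)."""
    merged = [row[:] for row in base]
    for B, A, alpha, weight in loras:
        rank = len(A)                      # A is (rank x in), B is (out x rank)
        scale = weight * alpha / rank
        delta = matmul(B, A)
        for i, row in enumerate(delta):
            for j, value in enumerate(row):
                merged[i][j] += scale * value
    return merged

base = [[1.0, 0.0], [0.0, 1.0]]
timber = ([[1.0], [2.0]], [[3.0, 4.0]], 1.0, 0.5)  # hypothetical rank-1 LoRA at weight 0.5
print(apply_loras(base, [timber]))
```

Raising or lowering the last value in each tuple is the "weighting" described above: it changes how strongly that style or material pulls on the base model.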

Design the Future, or Become History

The distinction between “architect” and “technologist” has vanished. You are now a designer of systems. To lead the next era of construction, you must stop treating AI as a toy for social media and start treating it as a core component of your technical stack.

The transition is not easy; it requires unlearning decades of manual habits. However, the alternative is obsolescence. While traditional firms struggle with a 5-day rendering turnaround, the Nuvira-aligned architect has already presented 50 optimized, data-backed variations to the stakeholder.

Are you ready to transcend the sketch? Join the Nuvira Space AI Guild. We provide the industry-standard training for architects moving into the generative space. Access our private repository of custom-trained LoRAs for 3D-printed concrete and high-performance glass facades.